The Local Maxima Trap

Applied Divinity Studies asks: "Why don’t people quit their jobs at large tech companies?"[1]

We can explore this phenomenon through a machine learning analogy. I propose that human beings are awful at optimizing explore-exploit algorithms.[2]

Careers as Gradient Descent

A useful metaphor for our careers is a hill climbing algorithm.[3] Imagine that you are dropped at a random point in a hilly area. Your goal is to get to the top of the highest hill. The problem is, you can only see a few feet in front of you.

If you only move uphill, you risk getting stuck at a local maximum. You’ve gotten to the top of a hill, but there’s no way to know if it’s the highest one in the area. So there needs to be some element of randomness in your steps – you need to take downhill steps in order to explore different hills in the terrain.

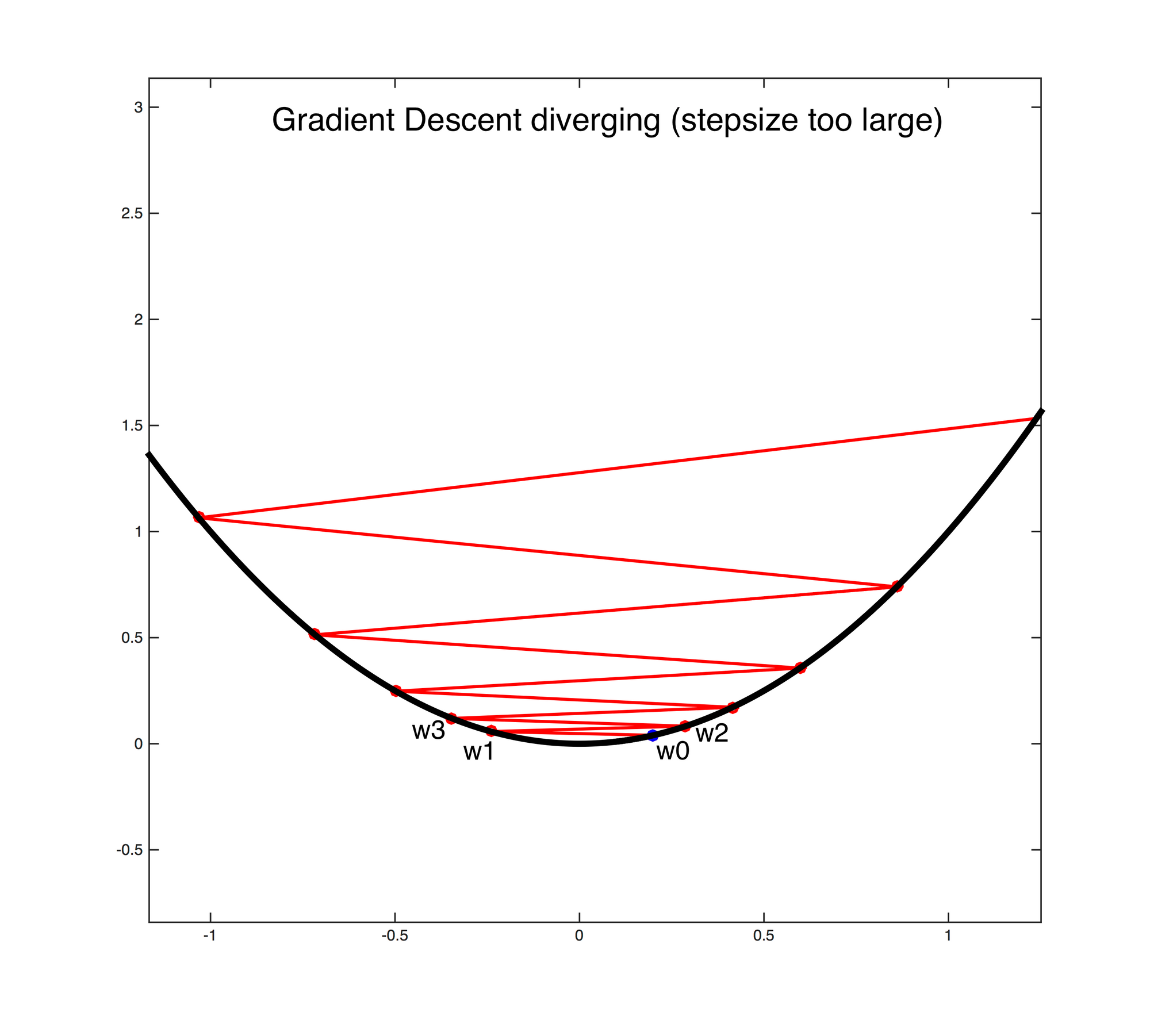
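To make the trap concrete, here is a minimal sketch in Python. The terrain (`HEIGHTS`), the function names, and the `explore_prob` parameter are all made up for illustration; this is a toy under those assumptions, not a standard algorithm implementation. One climber only ever steps uphill, while the other occasionally takes a random step that may lead downhill.

```python
import random

# Hypothetical terrain: a small hill (index 2), a dip (index 3), a taller hill (index 6).
HEIGHTS = [0, 2, 3, 2, 4, 6, 8, 6]


def neighbors(heights, pos):
    """Positions one step to the left and right that are still on the map."""
    return [p for p in (pos - 1, pos + 1) if 0 <= p < len(heights)]


def greedy_climb(heights, start):
    """Only ever step uphill; stop as soon as no neighbor is higher."""
    pos = start
    while True:
        best = max(neighbors(heights, pos), key=lambda p: heights[p])
        if heights[best] <= heights[pos]:
            return pos
        pos = best


def exploring_climb(heights, start, explore_prob, steps, rng):
    """With probability explore_prob take a random step (possibly downhill);
    otherwise step uphill when possible. Returns the highest position visited."""
    pos = best_pos = start
    for _ in range(steps):
        options = neighbors(heights, pos)
        if rng.random() < explore_prob:
            pos = rng.choice(options)
        else:
            uphill = max(options, key=lambda p: heights[p])
            if heights[uphill] > heights[pos]:
                pos = uphill
        if heights[pos] > heights[best_pos]:
            best_pos = pos
    return best_pos


stuck = greedy_climb(HEIGHTS, start=0)
explored = exploring_climb(HEIGHTS, start=0, explore_prob=0.3, steps=2000,
                           rng=random.Random(0))
print("greedy climber stops at height", HEIGHTS[stuck])
print("exploring climber's best height", HEIGHTS[explored])
```

The greedy climber halts on the small hill because both neighbors are lower. The exploring climber pays for its random steps with wasted motion, but a single lucky step into the dip is enough to put it on the slope of the taller hill.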

This hill-climbing metaphor for career exploration is similar to the way that a neural network “learns”. The key concept that we need to apply is alpha, or learning rate.[4] Learning rate describes the magnitude of updates that the network makes for each new data point encountered. This affects both how fast the algorithm learns and whether or not we actually reach a minimum. (A network descends toward low error rather than climbing toward a peak, but the picture is the same terrain flipped upside down.)

To make this a bit more concrete, imagine a neural network that attempts to classify images of pieces of food:

- If the learning rate is 0, each new piece of information will not update our classifier at all. The network simply ignores all new data points.

- If the learning rate is 1, each new piece of information will be the only piece of information that the classifier takes into account. We’ve built a “this photo of a hot dog vs. everything else” classifier, which isn’t very interesting.

- If the learning rate is too low, the model will learn very slowly, though it will eventually be able to distinguish different types of food.

- If the learning rate is too high, the model will learn quickly. However, the model also runs the risk of having a high error rate because it overfits the most recent data. It “bounces” between different understandings of food, heavily influenced by the most recent pieces of information it has encountered.
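The four cases above can be sketched with a much simpler learner than a neural network. The snippet below is an assumption-laden toy: it tracks a single number with the update `estimate += alpha * (x - estimate)`, which is a gradient step on squared error for one value. The function name and the sample data are hypothetical.

```python
def online_estimate(data, alpha, initial=0.0):
    """Update a running estimate one data point at a time.

    alpha is the learning rate: the fraction of the gap between the
    current estimate and the new data point that we close on each step.
    """
    estimate = initial
    for x in data:
        estimate += alpha * (x - estimate)
    return estimate


data = [4.0, 6.0, 5.0, 9.0]  # made-up observations

print(online_estimate(data, alpha=0.0))  # ignores every data point
print(online_estimate(data, alpha=1.0))  # remembers only the last data point
print(online_estimate(data, alpha=0.5))  # blends old belief with new data
```

With `alpha=0.0` the estimate never leaves its starting value, and with `alpha=1.0` it always equals the most recent observation, so a single outlier like the final `9.0` completely overwrites everything learned before it.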

When setting up a machine learning model, people will often “tune” the parameter by trying out a few different learning rates to define the optimal rate for the particular algorithm. But we can’t run simulations of our careers and then choose the optimal learning rate after reviewing the results.
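Tuning can be sketched as a simple sweep: run the same learner with several candidate rates and keep the one with the lowest error. This is a toy under stated assumptions, not how any particular library does it. The learner is a single running estimate, and `prediction_error`, `tune_alpha`, and both data streams are hypothetical names and values chosen for illustration.

```python
def prediction_error(data, alpha, initial=0.0):
    """Mean squared error of predicting each point before seeing it."""
    estimate = initial
    total = 0.0
    for x in data:
        total += (x - estimate) ** 2        # score the prediction first
        estimate += alpha * (x - estimate)  # then learn from the point
    return total / len(data)


def tune_alpha(data, candidates, initial=0.0):
    """Return the candidate learning rate with the lowest prediction error."""
    return min(candidates, key=lambda a: prediction_error(data, a, initial))


candidates = [0.0, 0.1, 0.5, 1.0]

steady = [5.0] * 10      # the world never changes
noisy = [4.0, 6.0] * 5   # pure noise around a prior belief of 5.0

print(tune_alpha(steady, candidates))              # picks 1.0
print(tune_alpha(noisy, candidates, initial=5.0))  # picks 0.0
```

The best rate depends entirely on the data: jumping straight to the latest observation wins when the signal is steady, and ignoring new points wins when they are pure noise around an already-correct belief. This kind of replay over the same history is exactly what a career does not allow.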

If someone has a career learning rate that is too high, they bounce around. They have tons of ideas for their career, maybe start new businesses, try to grow their skillset, but get partway through and then jump to the next endeavor. Conversely, if their learning rate is too low, they take too long to reach the global maximum. They may spend 30 years climbing a small foothill when the terrain contains the Rockies.

So why don’t people quit their jobs at large tech companies? They have the wrong learning rate for the neural net of their careers. They’ve become stuck at a local maximum.

Hazing as the Path to Brotherhood

“Whoever fights monsters should see to it that in the process he does not become a monster. And if you gaze long enough into an abyss, the abyss will gaze back into you.”

– Friedrich Nietzsche

A key element missing from this machine learning analogy is that our preferences change over time. Because most people experience loss aversion, setting out on a certain path makes it harder to explore other paths.

This strikes me as the strongest argument against the effective altruism concept of “earning to give”. In Doing Good Better, William MacAskill addresses this directly:

Another important consideration regarding earning to give is the risk of losing your values by working in an environment with people who aren’t as altruistically inclined as you are. For example, David Brooks, writing in The New York Times, makes this objection in response to a story of Jason Trigg, who is earning to give by working in finance:

You might start down this course seeing finance as a convenient means to realize your deepest commitment: fighting malaria. But the brain is a malleable organ. Every time you do an activity, or have a thought, you are changing a piece of yourself into something slightly different than it was before. Every hour you spend with others, you become more like the people around you.

Gradually, you become a different person. If there is a large gap between your daily conduct and your core commitment, you will become more like your daily activities and less attached to your original commitment.

This is an important concern, and if you think that a particular career will destroy your altruistic motivation, then you certainly shouldn’t pursue it. But there are reasons for thinking that this often isn’t too great a problem. First, if you pursue earning to give but find your altruistic motivation is waning, you always have the option of leaving and working for an organization that does good directly. At worst, you’ve built up good work experience. Second, if you involve yourself in the effective altruism community, then you can mitigate this concern: if you have many friends who are pursuing a similar path to you, and you’ve publicly stated your intentions to donate, then you’ll have strong support to ensure that you live up to your aims. Finally, there are many examples of people who have successfully pursued earning to give without losing their values…There is certainly a risk of losing one’s values by earning to give, which you should bear in mind when you’re thinking about your career options, but there are risks of becoming disillusioned whatever you choose to do, and the experience of seeing what effective donations can achieve can be immensely rewarding.

But how many fresh college graduates take a software engineering job at Google while “surrounding themselves with other members of the effective altruism community”? Most people lack MacAskill’s level of circumspection in making career decisions. They stumble into their life decisions rather than making them deliberately. And even for the most deliberate, life is made up more of things that happen to you than things that you control directly.

Once you start down a path, you take on the values of the people that you interact with on that path. My freshman year of college, many of my acquaintances went through a hellish hazing process when pledging fraternities. In the moment, they said they hated the process. Yet the next year, they were hazing the next class of pledges.

Fraternity hazing prepares people for the bullshit jobs that they encounter after college. These experiences are meaningful precisely because of the wasted effort put into them. The more that we struggle for something, the more we value it.

You see this combination of increased opportunity cost and sunk cost fallacy in all sorts of fields that attract ambitious people: finance, law, medicine. Exploration is explicitly discouraged in favor of exploiting the path in front of you. So without a clear plan to counteract these effects, your learning rate declines as you experience some modicum of success.

More broadly, exploration is encouraged for children but discouraged for adults. As a 20- or 25-year-old, it’s exciting to up and move to a new city and start a career in a new industry. Your friends may look askance at you if you do the same at 45.[5]

I’ve experienced this in my own career. As a fresh college graduate, I was in debt, yet turned down a six-figure investment banking job when my startup was funded to the tune of $35,000 by an accelerator in Santiago, Chile.[6] But today, with far more experience, money in the bank, and better business ideas, it’s harder for me to take the leap to be a full-time entrepreneur. My learning rate has declined and I’m less willing to deviate from the hill that I’m climbing.

This is essentially the conclusion that Applied Divinity Studies reaches:

Once you work at Google, you look around, see thousands of genuinely brilliant programmers who aren’t successful, and you get totally trapped. All of a sudden, you go from “I’m incredibly gifted and would do great things if only society wasn’t holding me back” to “there are literally 100 people within eyesight more gifted than me, and they’ve all settled for mediocre jobs, so I guess that’s the most I can hope for”.[7]

Escaping the Matrix

Can anything be done about this? Presumably, this is a net negative for society. People who could be creating value (and employment) by founding companies, pushing the frontiers of scientific knowledge, or casting into being great works of art are instead toiling away at yet another superfluous messaging product for Google.

As explored in the empirical data, many founders start out on the same path that leads to an engineering role at a large tech company. However, they bounce off of this path before their peers begin employment at these companies.

But rather than the selection effect that Applied Divinity Studies addresses – that founders don’t want to work for Google – this may be an example of the exact opposite selection effect. Namely, Google won’t employ the type of people that become founders.

Successful founders are intellectually curious about the world around them, both within and outside of their areas of expertise. They draw connections between seemingly disparate ideas – that is a large part of why they are able to create novel businesses. Steve Jobs bummed around India, experimented with LSD, and sat in on calligraphy classes as a college dropout. Patrick Collison reads about and has questions regarding nearly any topic imaginable. Elon Musk is driving humanity forward in the realms of energy, transportation, space travel, and artificial intelligence, but after graduating from Penn with a degree in physics couldn’t get a job at Netscape.

The best founders are exploring – they have a large alpha. Starting a company requires breadth of knowledge (to make connections) followed by depth into the specifics. In its hiring process, big tech selects only for depth, for those who have optimized for the big tech path.

Smaller, scrappier companies are more likely to tolerate exploration. After the startup that I founded, I applied to the big tech companies and didn’t get interviews. But DoorDash – at the time a 200-ish person company – was willing to take a chance on me.

All of this supports the conclusion that when Googlers do start companies, those companies perform disproportionately well.

So what does all of this mean?

In the optimization problem of your career, climbing one hill makes it easier to reach the top of other, higher hills. Working at Google is a great first step to starting a company – you gain legitimacy, meet potential cofounders, can save enough money to not have to worry about personal finances, etc. But paradoxically, frustratingly, the people most likely to make it to the top of this hill are the ones most likely to get stuck there. It’s not that future founders self-select away from big tech. Rather, big tech selects for the people who have optimized for the hill of big tech, not the ones who have explored enough to be successful founders.

Finally, if you work at a big tech company, it makes sense to increase your alpha periodically. Most career decisions are reversible; after your startup fails, you can go back to the Product Manager role at Facebook. But you only have one life. You won’t be able to re-run the simulation with a different learning rate once your career ends.

The key assumption here is that large tech company employees have not reached their global maxima.

Presumably, you have some values, and those values are not maxed out. They might be hedonic (your life is not as pleasurable as it could be), altruistic (the world is not as good as it could be), or narcissistic (your status is not as high as it could be).

For the small subset of Google, Facebook, etc. employees who “love your job more than anything you can possibly imagine doing instead”:

- Congratulations

- You can ignore the rest of this post

Scott Young provides a good overview of this topic. ↩︎

Chris Dixon’s blog post from 2009 was my introduction to this concept. ↩︎

I tried to make this overview as simple as possible, but no simpler.

- I recognize that technically alpha is a hyperparameter, as opposed to a parameter.

- I referenced this Slate Star Codex post to help me explain alpha to a non-technical audience.

I’m convinced this is the reason adults struggle so much to learn new languages. Children aren’t afraid of looking stupid, so they are constantly babbling, are corrected in their language, and improve over time. Most adults are afraid to look like idiots when they speak (almost never the case, everyone I’ve encountered appreciates that you’re trying to speak their language), so they only get in a fraction of the reps. ↩︎

Startup Chile: a great experience when you’re 22, would never do it again. But that’s the topic for another post. ↩︎

Related: Smart people can convincingly rationalize nearly anything. ↩︎